What is K-Nearest Neighbor (K-NN)?

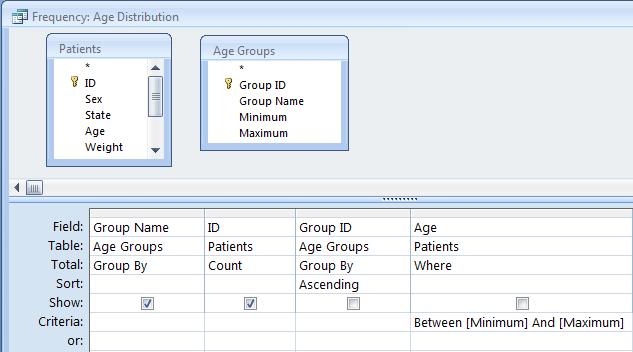

The k-nearest neighbors (k-NN) algorithm can be used for both classification and regression; the focus here is on classification. k-NN classifiers are an example of instance-based, or memory-based, supervised learning: they work by memorizing the labeled examples in the training set.

K Nearest Neighbors - Classification: K nearest neighbors is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., a distance function). KNN has been used in statistical estimation and pattern recognition as a non-parametric technique since the early 1970s. Algorithm: a case is classified by a majority vote of its neighbors.

The number of neighbors is the parameter k of the k-nearest neighbor algorithm. As a guideline, if the number of observations (rows) is less than 50, the value of k should be between 1 and the total number of observations; if the number of rows is greater than 50, the value of k should be between 1 and 50. Note that if k is chosen as the total number of observations in the training set, all the observations in the training set become neighbors of every new case, so every new case is assigned the majority class.
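The majority-vote rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `knn_classify`, the choice of Euclidean distance, the tie handling and the toy data are all assumptions made for the example.

```python
import math
from collections import Counter

def knn_classify(train_points, train_labels, query, k):
    """Classify `query` by majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_points):
        raise ValueError("k must be between 1 and the number of training points")
    # Rank training indices by Euclidean distance to the query
    # (sorted() is stable, so equally distant points keep their input order).
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    votes = Counter(train_labels[i] for i in order[:k])
    # On a tied vote count, Counter returns the label it saw first,
    # i.e. the label of the nearer neighbor (an assumed tie-break rule).
    return votes.most_common(1)[0][0]

# Hypothetical toy data: three points of class "a", two of class "b".
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 7.5)]
labels = ["a", "a", "a", "b", "b"]
query = (6.5, 6.5)

for k in (1, 3, 5):
    print(k, knn_classify(points, labels, query, k))
```

With this data the query sits next to the two "b" points, so k = 1 and k = 3 both return "b". With k = 5, the full size of the training set, every point votes and the result is simply the overall majority class, "a".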

Among the various methods of supervised statistical pattern recognition is the nearest-neighbor rule for two-class problems: a new sample is matched to the training point closest to it, and the sign of that point then determines the classification of the sample. The k-NN classifier extends this idea by taking the k nearest points and assigning the sign of the majority. It is common to select k small and odd (typically 1, 3 or 5) so that a two-class vote cannot tie. Larger k values help reduce the effects of noisy points within the training set.

In MATLAB, ClassificationKNN is a nearest-neighbor classification model in which you can alter both the distance metric and the number of nearest neighbors. Because a ClassificationKNN classifier stores training data, you can use the model to compute resubstitution predictions, that is, predictions for the training data itself. Alternatively, use the model to classify new observations using the predict method.
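To make the sign-of-the-majority rule concrete, the following sketch classifies a one-dimensional query with labels of +1 and -1. It is only an illustration under stated assumptions: the function name `knn_sign`, the absolute-difference distance and the toy data (including one deliberately mislabeled point at 3.6) are invented for the example and are unrelated to ClassificationKNN.

```python
def knn_sign(train_x, train_y, query, k):
    """Two-class k-NN on 1-D data: labels are +1 or -1, prediction is the sign of their sum."""
    # Indices of the k training values closest to the query.
    nearest = sorted(range(len(train_x)),
                     key=lambda i: abs(train_x[i] - query))[:k]
    total = sum(train_y[i] for i in nearest)
    if total > 0:
        return 1
    if total < 0:
        return -1
    return 0  # a tied vote, which can only happen when k is even

# Hypothetical data: +1 on the left, -1 on the right, plus a noisy -1 at 3.6.
xs = [1.0, 2.0, 3.0, 3.6, 4.0, 7.0, 8.0, 9.0]
ys = [+1, +1, +1, -1, +1, -1, -1, -1]

for k in (1, 2, 3):
    print(k, knn_sign(xs, ys, 3.5, k))
```

For the query 3.5, k = 1 follows the noisy point and returns -1, k = 2 produces a tie (reported here as 0), and k = 3 outvotes the noisy point and returns +1. This is the behavior that motivates choosing an odd k and a value larger than 1 when the training data is noisy.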

K-nearest neighbors, also known as K-NN, belongs to the family of supervised machine learning algorithms, which means a labeled dataset (one with a target variable) is used to predict the class of a new data point. The K-NN algorithm is a robust classifier that is often used as a benchmark for more complex classifiers such as artificial neural networks (ANN) or support vector machines (SVM).
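One way K-NN might serve as a benchmark is to record its accuracy on held-out labeled data and compare a more complex model against that number. The sketch below assumes a simple train/test split; the helper names `predict` and `baseline_accuracy`, the Euclidean distance and the data are hypothetical.

```python
import math
from collections import Counter

def predict(train, query, k):
    """`train` is a list of (point, label) pairs; return the majority label of the k nearest."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def baseline_accuracy(train, test, k):
    """Fraction of held-out (point, label) pairs that k-NN labels correctly."""
    hits = sum(predict(train, point, k) == label for point, label in test)
    return hits / len(test)

# Hypothetical labeled data: a training set and a small held-out test set.
train = [((0.0, 0.0), "neg"), ((0.5, 1.0), "neg"), ((1.0, 0.5), "neg"),
         ((4.0, 4.0), "pos"), ((4.5, 5.0), "pos"), ((5.0, 4.5), "pos")]
test = [((0.8, 0.8), "neg"), ((4.2, 4.4), "pos"), ((2.0, 2.0), "pos")]

score = baseline_accuracy(train, test, k=3)
print(f"k-NN baseline accuracy: {score:.2f}")
```

On this toy split the third test point lies closer to the "neg" cluster than its label suggests, so the baseline gets two of three cases right. A more complex classifier would need to beat that figure on the same split to justify its extra cost.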